Our future with nanotechnology

Today's computational power makes it possible to emulate the human brain but, for now, doing so is neither economically nor energetically sustainable: a human brain consumes less energy to solve problems than a machine does. However, increasingly intensive miniaturization is enabling unparalleled processing performance and intelligent systems. GpGPU Computing and Quantum Computing are two areas where we expect significant improvements in the coming years. Two experts share this view. The first is Diego Lo Giudice, Vice President and Principal Analyst at Forrester. The second is Gianluigi Castelli, Professor of Management Information Systems at SDA Bocconi School of Management and Director of Devo Lab. I recently had the opportunity to speak with both of them for the cover story on Artificial Intelligence and Cognitive Computing that I wrote for the May 2016 issue of ZeroUno.

Drawing on what Lo Giudice and Castelli ‘confessed’ to me, I would like to share some thoughts on the future of nanotechnology by reporting their observations.

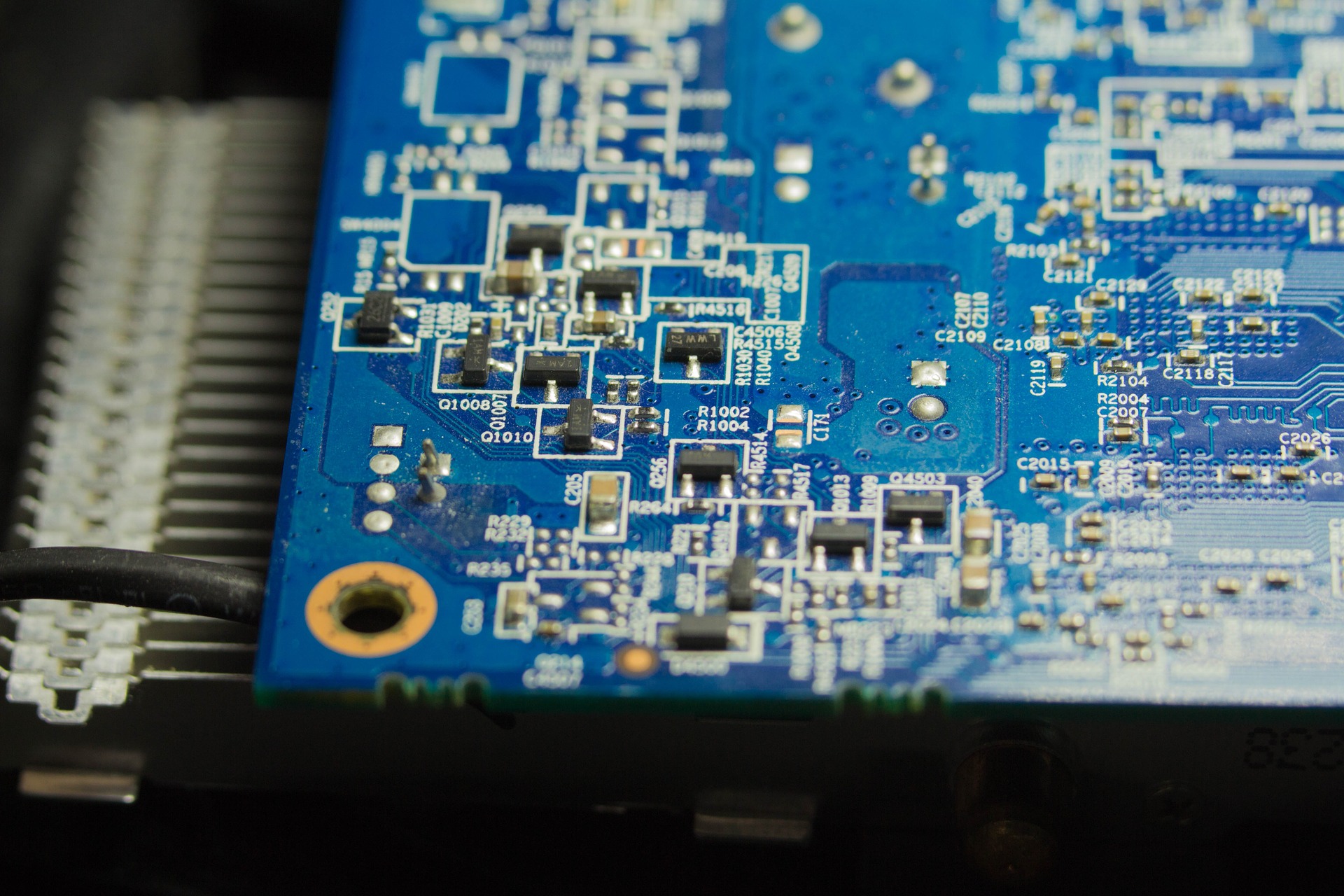

“If we analyze the ability to fit larger and more complex neural networks onto a chip, we can see an evolutionary process very similar to the one we have witnessed with conventional CPUs in past years,” noted Castelli. “Several capabilities are already visible inside our smartphones, which contain integrated chips, or processors that combine a CPU, a GPU, a digital signal processor and more:

- increasingly intensive miniaturization;
- the ability to create transistors that actually act as neurons (groups of transistors that behave like neurons and synapses);
- the ability to engineer these processes, where greater ability means higher intelligence and, therefore, a larger neural network that can fit into a single component.

We have succeeded in putting revolutionary processing capability and intelligent systems into miniaturized devices. It remains to be seen whether this growth in complexity will follow Moore’s Law, bringing an exponential increase in the complexity of on-chip neural networks. Alternatively, we may be approaching an asymptote of Moore’s Law, where the scale of features inside microprocessors makes it difficult to keep shrinking the components of integrated circuits at the same pace.”

Both experts agree that one of the most important areas requiring action concerns processing power and the way systems process information. “Communication between one neuron and another is rather slow; however, since there are billions of neurons, the human brain can be seen as a parallel machine with extremely powerful computing ability. It can perform an enormous number of elementary operations simultaneously,” observed Castelli. “Today’s computers are the complete opposite: they are extremely fast at executing a series of complex operations, but in terms of parallelism they still have significant limitations.”

GpGPU Computing and Quantum Computing are two areas in which we will see enormous improvements in the coming years, declares Lo Giudice. “The introduction of GPUs has brought enormous gains in efficiency and computing power over the years [Editor’s note: consider that a traditional CPU consists of several cores optimized for sequential serial processing, whereas a GPU has a parallel architecture with thousands of smaller, more efficient cores designed to handle many operations simultaneously],” notes the Forrester analyst, “but it is GpGPU Computing that will accelerate the development of neural networks. ‘General-purpose GPU Computing’ refers to the use of GPUs, which were originally created for graphics processing, for other, more general kinds of processing. Technically, GPU-accelerated computing has existed since 2007. In this approach, a graphics processing unit is coupled to a CPU to speed up applications. It is already used in various Cognitive Computing platforms (drones, robots, cars, etc.). Development is now focused on further improving GPU performance and scalability to accelerate the development of Deep Neural Networks (DNNs).”
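The CPU/GPU contrast above comes down to independence. In a neural-network layer, each neuron's weighted sum depends only on the inputs, not on the other neurons, so the work splits naturally into separate tasks. The Python sketch below is a hypothetical illustration, not GPU code. It computes a small dense layer twice:

- once with a sequential loop, as a single CPU core would;
- once by mapping the same per-neuron function over a thread pool that stands in for many parallel cores.

The function names, weights and the ReLU activation are assumptions. In CPython the thread pool demonstrates the decomposition, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def neuron_output(weights: Sequence[float], inputs: Sequence[float], bias: float) -> float:
    """Weighted sum plus ReLU for one neuron; it does not depend on any other neuron."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def dense_layer_sequential(W: List[List[float]], b: List[float], x: List[float]) -> List[float]:
    """CPU-style: visit the neurons one after another."""
    outputs = []
    for weights, bias in zip(W, b):
        outputs.append(neuron_output(weights, x, bias))
    return outputs


def dense_layer_parallel(W: List[List[float]], b: List[float], x: List[float],
                         workers: int = 4) -> List[float]:
    """Data-parallel style: each neuron is an independent task handed to a worker."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, whatever order the tasks finish in
        return list(pool.map(lambda wb: neuron_output(wb[0], x, wb[1]), zip(W, b)))


if __name__ == "__main__":
    W = [[0.2, -0.5, 1.0], [1.5, 0.0, -0.3], [-1.0, -1.0, -1.0]]  # hypothetical weights
    b = [0.1, -0.2, 0.0]
    x = [1.0, 2.0, 3.0]
    print(dense_layer_sequential(W, b, x))
    print(dense_layer_parallel(W, b, x))
```

Both functions return the same values because every neuron is computed from the same inputs with no shared state. That absence of dependencies is what lets a GPU assign such work to thousands of cores at once.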

Quantum Computing, on the other hand, focuses on storing large amounts of data in very small spaces [Editor’s note: a quantum computer performs operations on data by exploiting phenomena typical of quantum mechanics, such as superposition, whereby atomic and subatomic particles can exist in several quantum states at once].

“For years, the power of computers increased at the same pace as the miniaturization of electronic circuits,” explains Lo Giudice. “Quantum mechanics has brought the miniaturization of components to a halt [Editor’s note: at these scales, quantum mechanics points to imminent physical limits for silicon. Indeed, many believe that Moore’s Law, according to which the number of components in an integrated circuit doubles every 18 to 24 months, no longer holds. The silicon manufacturing process had a feature size of approximately 10 microns (millionths of a meter) in 1971, 130 nanometers (billionths of a meter) in 2001 and approximately 22 nanometers in 2016. According to experts, it will reach 7 nanometers in 2018 and 5 nanometers in 2020, after which further miniaturization of silicon components will run into problems. According to the laws of physics, the electronics would stop working, which is why there is already talk of quantum materials such as graphene replacing silicon]. However, the application of quantum mechanics in the computer industry has made it possible to develop infrastructures with higher computing power than previous systems.”
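The feature sizes in the note above can be checked against Moore’s Law with a little arithmetic. The Python sketch below rests on a simplifying assumption not stated in the source: that component density scales with the inverse square of the feature size. Under that assumption, it estimates how many density doublings separate the quoted nodes and the implied average doubling period. Node names stopped tracking physical dimensions exactly in recent generations, so treat the result as a rough illustration.

```python
import math

# Feature sizes quoted in the text, converted to nanometres (10 microns = 10,000 nm).
NODES_NM = {1971: 10_000.0, 2001: 130.0, 2016: 22.0}


def density_doublings(old_nm: float, new_nm: float) -> float:
    """Number of density doublings when features shrink from old_nm to new_nm.

    Assumes density ~ 1 / feature_size**2, a deliberate simplification.
    """
    density_ratio = (old_nm / new_nm) ** 2
    return math.log2(density_ratio)


def doubling_period_months(year_a: int, year_b: int) -> float:
    """Average months per density doubling between two years listed in NODES_NM."""
    doublings = density_doublings(NODES_NM[year_a], NODES_NM[year_b])
    return (year_b - year_a) * 12 / doublings


if __name__ == "__main__":
    for a, b in [(1971, 2001), (2001, 2016), (1971, 2016)]:
        print(f"{a}-{b}: {density_doublings(NODES_NM[a], NODES_NM[b]):.1f} doublings, "
              f"about one every {doubling_period_months(a, b):.0f} months")
```

Run as-is, the sketch gives an implied density-doubling period of roughly two and a half to three years for each interval. That is longer than the 18 to 24 months quoted for component counts. The gap is expected, because transistor counts also grew through larger dies and design changes, which this density-only sketch ignores.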

The basic idea is to use qubits (the quantum state of a particle or atom) instead of traditional binary units of information (bits). Rather than encoding the ‘open’ and ‘closed’ states of a switch as 0 and 1, a qubit encodes binary information in two directions, ‘up’ and ‘down’. What matters for computation is that these states of atomic and subatomic particles can be superimposed. This greatly increases the amount of binary information that can be encoded, which is needed to solve extremely complex calculations and problems such as those underlying Artificial Intelligence. “In this regard, there is still a lot of work to do. It is still impossible to truly control atoms and particles and their interaction and communication, which also makes it difficult to write ad hoc algorithms designed to run on such systems,” admits Lo Giudice. “On the other hand, some concrete results can already be seen with the so-called neuromorphic chip.”
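A small state-vector simulation can illustrate the superposition idea described above. The Python sketch below is an illustrative assumption, not how any real quantum computer is programmed. It works as follows:

- a qubit is stored as two complex amplitudes, one for ‘up’ (|0⟩) and one for ‘down’ (|1⟩);
- a Hadamard gate puts the qubit into an equal superposition;
- a register of n such qubits needs 2**n amplitudes to describe, whereas n classical bits hold just one of those 2**n values at a time.

Measurement still returns a single outcome, so the point is the size of the state, not free access to every value.

```python
import math
from typing import List

Amplitudes = List[complex]

UP: Amplitudes = [1 + 0j, 0 + 0j]    # |0>, 'up'
DOWN: Amplitudes = [0 + 0j, 1 + 0j]  # |1>, 'down'


def hadamard(state: Amplitudes) -> Amplitudes:
    """Apply the Hadamard gate, which maps a basis state to an equal superposition."""
    s = 1 / math.sqrt(2)
    a, b = state
    return [s * (a + b), s * (a - b)]


def probabilities(state: Amplitudes) -> List[float]:
    """Born rule: the probability of each measurement outcome is |amplitude|**2."""
    return [abs(amp) ** 2 for amp in state]


def tensor(a: Amplitudes, b: Amplitudes) -> Amplitudes:
    """Combine two registers (Kronecker product); their sizes multiply."""
    return [x * y for x in a for y in b]


def register(n: int) -> Amplitudes:
    """n qubits, each put into superposition by its own Hadamard gate."""
    state: Amplitudes = [1 + 0j]
    for _ in range(n):
        state = tensor(state, hadamard(UP))
    return state


if __name__ == "__main__":
    print(probabilities(hadamard(UP)))  # 'up' and 'down' are (numerically) equally likely
    for n in (1, 2, 10):
        print(n, "qubits ->", len(register(n)), "amplitudes")
```

The exponential growth of the amplitude list is also why simulating many qubits on a classical machine quickly becomes impractical.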

As I explained in detail in my post “Artificial Intelligence and Cognitive Computing … new horizons”, this is a field of hardware that comes much closer to ‘strong AI’. A neuromorphic chip is a microchip that integrates data processing and storage in a single micro component to emulate the sensory and cognitive functions of the human brain.
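The source does not say which neuron model neuromorphic chips implement. A common textbook choice is the leaky integrate-and-fire (LIF) neuron, used here purely as an assumption. The Python sketch shows the property highlighted in the paragraph above: the neuron’s stored state (its membrane potential) and the computation that updates it live in the same place, and the neuron communicates only by emitting spikes. The parameter names and values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron with hypothetical parameters.

    The membrane potential is both the neuron's memory and the value it computes
    on, echoing how neuromorphic hardware co-locates storage and processing.
    """
    threshold: float = 1.0
    leak: float = 0.9        # fraction of the potential retained at each time step
    potential: float = 0.0

    def step(self, input_current: float) -> bool:
        """Leak, integrate the input, then fire and reset if the threshold is reached."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0
            return True
        return False


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Feed inputs one time step at a time and return the steps at which the neuron spiked."""
    spikes = []
    for t, current in enumerate(inputs):
        if neuron.step(current):
            spikes.append(t)
    return spikes


if __name__ == "__main__":
    print(run(LIFNeuron(), [0.3] * 10))
```

With a constant input of 0.3, the potential builds up over a few steps, crosses the threshold, resets and starts again. With an input too weak to overcome the leak, it never fires at all.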

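NOTE: The original Italian articles are available on the ZeroUno website:

– Intelligenza Artificiale e Cognitive Computing: i nuovi orizzonti (Artificial Intelligence and Cognitive Computing: the new horizons)

– Il futuro è nelle nanotecnologie (The future lies in nanotechnology)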